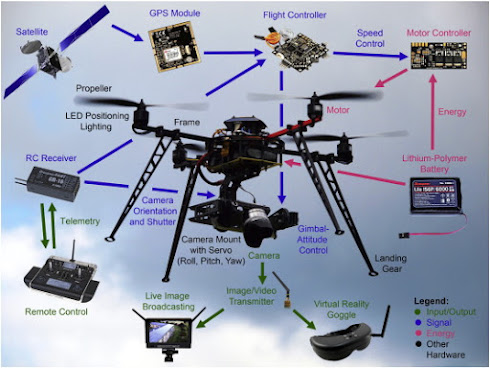

CamFly Films was founded in 2014 to provide photography and video services using UAVs.

Its founder, Serge Kouperschmidt, has more than 30 years of experience in video production and has worked as a cameraman and director of photography for the film industry around the world.

CamFly Films Ltd. is based in London and is a UAV operator certified by the CAA (Civil Aviation Authority). It offers professional photography and filming services in London and anywhere else in the United Kingdom.

https://www.youtube.com/watch?v=LHSDlY_IkLE

From cinematic aerial footage like that shown in the link above to industrial inspections, aerial mapping services, roof surveys, and topographic reports, the company provides tailored services built on its extensive flight experience.

Among its most in-demand activities is the surveying and monitoring of building construction, filming the daily progress of the works. Also worth highlighting are its activities in thermal imaging, photogrammetry, orthophotography, UAV mapping, 360° aerial photography, and 3D modelling from photographs taken with UAVs.

In addition to its standard PFCO (Permission for Commercial Operations), CamFly Films holds an OSC (Operating Safety Case). This permission, which is especially difficult to obtain, allows the company to fly legally at a shorter distance (10 metres from its target), at a higher altitude (188 metres), and beyond the line of sight. As a result, unlike the vast majority of other UAV operators, it can operate with full efficiency in the heart of London.

CamFly Films is also a video production company that offers photography, videography, and all related filming services: 4K video recording with latest-generation cameras, editing, colour grading, music, titles, voice-over, visual effects, and more.